3.1.2 Correlated data

When data is correlated, dependencies create problems for privacy definitions that assume an attacker with partial knowledge. To illustrate this, we recall two previously introduced definitions that model this situation differently: noiseless privacy (NPr) and distributional differential privacy (DistDP). We show that both definitions have undesirable consequences when data is correlated, and use DistDP as a starting point to solve this problem in two steps. First, we modify DistDP and introduce a new definition, causal differential privacy (CausDP), to prevent its most direct problems. Second, we propose a criterion on the data-generating distribution that still covers many practical use cases and makes CausDP equivalent to NPr. Together, these two steps yield a rigorous notion of DP with partial knowledge that avoids the known issues with correlated data.

Note that there have been substantial debates about the impact of correlations on the guarantees that DP provides. These debates are summarized in [TSD20], where the authors suggest a possible resolution: interpreting DP as a causal property. In this section, we continue this line of work in the context of partial knowledge. In particular, we show that modifying the definition in the same way as the “causal variants” of [TSD20] is not sufficient to solve all issues created by the presence of correlations in the data when the attacker only has partial knowledge.

For simplicity, in this section, we only consider the case with δ = 0. We re-introduce δ in Section 3.1.3.

Existing notions

The assumption that the attacker lacks background knowledge can be represented by considering the input data to be noisy. This idea was first proposed in [Dua09], and was formalized in [BBG11] as noiseless privacy. Instead of comparing two databases that differ in only one record, it uses a probability distribution , conditioned on the value of one record : the randomness in captures the attacker’s uncertainty. This probability distribution generates not only a dataset but also the attacker’s partial knowledge (with values in some space ). For brevity, we abbreviate as , abbreviate the observation by , and the observation by ; these notations as well as others used in this paper are summarized in the notation table on page 27.

Definition 49 (-noiseless privacy [BBG11]). Given a family of probability distributions on , a mechanism is -noiseless private (NPr) if for all , all , all indices and all such that and (we call this condition “ is compatible with and ”):

Here, the notation refers to the random variable defined by , where , conditioned on the event “ and ”.

Note that even though we make the attacker’s partial knowledge explicit, we could simplify the definition to not mention it explicitly. Indeed, for each distribution with values in , we could simply take all possible values of the background knowledge generated by , and consider the family of distributions , which only generate a dataset . We use this conceptual simplification in Definition 33 and Definition 34, in Section 2.2.5. This simplification is no longer possible if we use -indistinguishability with , as we show in Section 3.1.3.

The original intuition behind DP states that changing one record must not change the output too much. NPr attempts to capture this intuition for an attacker with partial knowledge, but Bassily et al. [BGKS13] argue that this definition is too strong. The following example illustrates their argument.

Example 1. Assume has a global parameter that is either +1 or -1 with equal probabilities, and outputs normally distributed records with mean and a small standard deviation. Releasing the average of the record values is not NPr: for all indices , will be close to and will be close to , so the two distributions are very distinguishable. This happens even though the impact of a single record in the database is low: once is fixed, the random choice of is unlikely to have a large effect on the global average.
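The behavior described in Example 1 can be illustrated numerically. The following is a minimal sketch, not part of the original argument: the dataset size, the standard deviation, the seed, and the helper names `generate` and `release_average` are all assumptions made for illustration.

```python
import random

def generate(n, mu, sigma, rng):
    """Draw n records, each normally distributed around the global parameter mu."""
    return [rng.gauss(mu, sigma) for _ in range(n)]

def release_average(records):
    """The mechanism from Example 1: release the exact average of the records."""
    return sum(records) / len(records)

rng = random.Random(0)   # fixed seed, illustrative only
n, sigma = 1000, 0.1     # hypothetical values, not taken from the text

db_plus = generate(n, +1, sigma, rng)
db_minus = generate(n, -1, sigma, rng)
avg_plus = release_average(db_plus)    # close to +1
avg_minus = release_average(db_minus)  # close to -1

# Once the global parameter is fixed, replacing a single record by another
# value in [-2, 2] moves the released average by at most 4 / n.
changed = [2.0] + db_plus[1:]
shift = abs(release_average(changed) - avg_plus)
```

The two averages are far apart because they reveal the global parameter, while `shift` stays small: this is the gap between what conditioning on one record reveals and what that record actually contributes to the output.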

This example shows a definitional problem. If the attacker previously knows , revealing does not give much additional information on the target : an attacker with less initial knowledge is considered more powerful. We study this in detail in Section 3.1.2.

The authors propose an alternative definition to fix this problem: -distributional differential privacy. It requires that can be simulated by another mechanism , that does not have access to the sensitive property. The intuition is as follows: if is close to for some simulator , then cannot leak “too much” about the value of .

Definition 50 (-distributional differential privacy [BGKS13]). Given a family of probability distributions on , a mechanism satisfies -distributional differential privacy (-DistDP) if there is a simulator such that for all probability distributions , all , all , and all such that is compatible with :

where is the database from which the record has been removed.

The distribution and the mechanism from Example 1 satisfy this definition: the simulator can be defined as simply running on , possibly after adding or depending on the other records.

Distributional differential privacy under correlations

This critique of NPr is similar to the critique of the associative view of DP in [TSD20]. But the proposed fix has a flaw: can use strong dependencies in the data to artificially satisfy the definition. In the following example, the values of different records are strongly correlated, and the simulator cheats by using these correlations: consequently, the identity function is considered private!

Example 2. Let output duplicate records: for all , is picked from some probability distribution , and . Then the identity function , which simply outputs its input without any noise, is -DistDP! Indeed, the simulator can simply replace the missing record by its duplicate and output the entire database: is exactly the same as .
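The simulator of Example 2 can be sketched as follows. This is an illustration under assumptions: records are small integers, duplicates are stored at adjacent positions, and the names `generate_duplicated`, `identity_mechanism` and `simulator` are hypothetical.

```python
import random

def generate_duplicated(n_pairs, rng):
    """Each value is drawn once and stored twice, at adjacent positions."""
    db = []
    for _ in range(n_pairs):
        v = rng.randint(0, 9)
        db += [v, v]
    return db

def identity_mechanism(db):
    """Outputs its input without any noise."""
    return list(db)

def simulator(db_minus_i):
    """Rebuild the full database from the database with one record removed.

    Scan the records pair by pair; the first position where a record has no
    matching neighbor is where a copy must be re-inserted.
    """
    for k in range(0, len(db_minus_i), 2):
        if k + 1 >= len(db_minus_i) or db_minus_i[k] != db_minus_i[k + 1]:
            return db_minus_i[:k + 1] + [db_minus_i[k]] + db_minus_i[k + 1:]
    raise ValueError("no unpaired record found")

rng = random.Random(0)
db = generate_duplicated(5, rng)
# Whichever record is removed, the simulator reproduces the mechanism's output.
matches = [simulator(db[:i] + db[i + 1:]) == identity_mechanism(db)
           for i in range(len(db))]
```

The simulator never sees the removed record, yet its output is identical to the output of the identity function on the full database, which is why the definition is satisfied despite the mechanism leaking everything.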

Here, the dependency relationships are “extreme”, as each record is duplicated. But even when records are less strongly correlated, the problem is still present. In fact, the more dependencies there are in the data, the more accurately the simulator can simulate the missing record, and the more “private” the mechanism is (since ε gets lower): a more powerful adversary, who can exploit dependencies in the data, is considered weaker by the definition. This is clearly undesirable.

How can we formalize an adversary that cannot “cheat” using dependencies in the data? We propose one possible option: using the same technique as the causal variants of DP described in [TSD20], we simply change the target record after the distribution is generated.

Definition 51 (-causal differential privacy). Given a family of probability distributions on , a mechanism satisfies -causal differential privacy (-CausDP) if for all probability distributions , all , all , and all compatible with and :

where is the database , where the -th record has been replaced by .

CausDP still captures DP’s intuition: the change in one data point should not influence the output of the mechanism too much. However, the change happens after the influence of the dependencies in the data. This version is strictly stronger than the original version: if a mechanism is -CausDP, then it is also -DistDP. Indeed, the simulator can always replace the missing record with an arbitrary value and return .

In [BGKS13], the authors also introduce an inference-based version of DistDP. We can just as easily adapt CausDP to this different formalization.

Definition 52 (-inference-based causal differential privacy). Given a family of probability distributions on , a mechanism satisfies -inference-based causal differential privacy (-IBCDP) if for all probability distributions , for all indices , all , all , and all compatible with and :

where is the database , where the record has been replaced by .

Note that this definition is equivalent to the indistinguishability-based version (Definition 51) up to a change in parameters.

Proposition 21. -CausDP implies -IBCDP, and -IBCDP implies -CausDP.

Proof. The first implication can be proven in the same way as Theorem 1 in [BGKS13], replacing by . For the second implication, suppose that a mechanism is -IBCDP, and assume that the attacker has no background knowledge. Consider an index , two possible record values , and one possible output value . Bayes’ rule gives us:

The first term is between and since is -IBCDP. We only need to show that the second term is also between and to conclude the proof. Notice that when , we have . Thus:

Again, the first term is between and since is -IBCDP. Since multiplying it with the second term gives , the second term is also between and .

In the more general case where the attacker does have some partial knowledge, all probabilities above are conditioned by the value of this partial knowledge, and the same reasoning holds. □
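The two steps of the second implication can be written out explicitly. The following is a sketch under assumed notation, which may differ from the notation used elsewhere in this work: $D_{i \to b}$ denotes the database where record $i$ is replaced by $b$, $O$ is an output, the attacker has no background knowledge, and $\varepsilon$-IBCDP is read as bounding the ratio of the posteriors of $D_i$ given $\mathcal{M}(D)=O$ and given $\mathcal{M}(D_{i \to b})=O$.

```latex
% Step 1: Bayes' rule applied to numerator and denominator; Pr[D_i = a] cancels.
\frac{\Pr[\mathcal{M}(D)=O \mid D_i=a]}{\Pr[\mathcal{M}(D_{i\to b})=O \mid D_i=a]}
  = \underbrace{\frac{\Pr[D_i=a \mid \mathcal{M}(D)=O]}
                     {\Pr[D_i=a \mid \mathcal{M}(D_{i\to b})=O]}}_{\in\,[e^{-\varepsilon},\,e^{\varepsilon}]}
    \cdot
    \frac{\Pr[\mathcal{M}(D)=O]}{\Pr[\mathcal{M}(D_{i\to b})=O]}

% Step 2: when D_i = b, we have D = D_{i \to b}, so the left-hand side below is 1.
1 = \frac{\Pr[\mathcal{M}(D)=O \mid D_i=b]}{\Pr[\mathcal{M}(D_{i\to b})=O \mid D_i=b]}
  = \underbrace{\frac{\Pr[D_i=b \mid \mathcal{M}(D)=O]}
                     {\Pr[D_i=b \mid \mathcal{M}(D_{i\to b})=O]}}_{\in\,[e^{-\varepsilon},\,e^{\varepsilon}]}
    \cdot
    \frac{\Pr[\mathcal{M}(D)=O]}{\Pr[\mathcal{M}(D_{i\to b})=O]}

% Hence the common second factor lies in [e^{-\varepsilon}, e^{\varepsilon}],
% and the ratio in Step 1 lies in [e^{-2\varepsilon}, e^{2\varepsilon}].
```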

This equivalence is only true in the context of this section, where ; we explain later why it fails when .

Does Example 1 satisfy CausDP? It depends: if can take arbitrarily large values, can be arbitrarily distinguishable from . Otherwise, can only have a bounded influence on the average and -CausDP can hold for some . In other words, when using the fixed version of the definition, whether a given mechanism is CausDP depends on the sensitivity of the mechanism. This is a good thing: it suggests that it captures the same intuition as DP.

Example 1 shows that CausDP is not stronger than NPr. Is the reverse true? In Example 3, we show that this is not the case.

Example 3. Consider the same as for Example 1: it depends on a global parameter, , which is either +1 or -1 with equal probabilities, and each of the records is normally distributed with mean and a small standard deviation . Let be the algorithm that counts outliers: it computes the average of all data points, and returns the number of records outside . As we saw before, conditioning on a value of is approximately equivalent to fixing : the number of outliers is going to be the same no matter what (0 with high probability). However, if we first condition on , and then change this record into , we can choose so that this record becomes an outlier; and make it 1 with high probability. Thus, this mechanism is NPr but not CausDP.
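The outlier-counting mechanism of Example 3 can be sketched as below. The interval used by the mechanism is not reproduced in the text above, so this sketch assumes a window of five standard deviations around the average; the dataset is a fixed, deterministic stand-in for a low-variance draw with global parameter +1, and `count_outliers` is a hypothetical name.

```python
def count_outliers(records, sigma, width=5):
    """Count records outside [mean - width * sigma, mean + width * sigma].

    The window width is an assumption made for this sketch.
    """
    mean = sum(records) / len(records)
    lo, hi = mean - width * sigma, mean + width * sigma
    return sum(1 for x in records if x < lo or x > hi)

sigma = 0.01
# Deterministic stand-in for records tightly concentrated around +1.
records = [1 + sigma * ((k % 5) - 2) / 2 for k in range(100)]
before = count_outliers(records, sigma)

# Causal change: replace one record after the data has been generated,
# choosing a value ten standard deviations away from the global parameter.
modified = [1 + 10 * sigma] + records[1:]
after = count_outliers(modified, sigma)
```

Here `before` is 0 and `after` is 1: the replaced record barely moves the average, so it falls outside the window while all other records stay inside.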

Even though Example 3 shows that NPr does not imply CausDP, it is natural to think that in many cases, if you change one data point as well as all data points correlated with it, it will have a bigger influence on the algorithm than if you only change without modifying the rest of the data. In Example 4, we show that even for simple data dependencies and mechanisms, we can find counterexamples to this intuition.

Example 4. Consider a probability distribution that outputs records, such that for all , is picked from some arbitrary probability distribution with values in , and . Then the mechanism that sums all records might be in NPr, but cannot be in CausDP. Indeed, if , then will always be even, but changing one record without modifying its duplicate can make the sum odd.
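The parity argument of Example 4 can be checked directly. This is a minimal sketch assuming integer-valued records; the value range, the number of records and the seed are arbitrary choices made for illustration.

```python
import random

rng = random.Random(1)
values = [rng.randint(0, 100) for _ in range(50)]
# Every value appears exactly twice, so the sum is twice an integer.
db = [v for v in values for _ in range(2)]
total = sum(db)

# Causal change: modify one record without touching its duplicate.
changed = list(db)
changed[0] = db[0] + 1
changed_total = sum(changed)
```

The sum over the original database is always even, while the sum after the single-record change is odd, so the two outputs are perfectly distinguishable.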

This last example suggests that finding a special case where NPr implies CausDP is likely difficult. There is, however, a special case where both are equivalent: the absence of dependencies in the data. If changing one record does not influence other records, then NPr and CausDP are equivalent. This result is similar to Corollary 2 in [BGKS13], but is simpler and without the change in parameters.

Proposition 22. Let be a family of probability distributions such that for all and all , the random variables are mutually independent. Then a mechanism is -NPr iff it is -CausDP.

Proof. Under these conditions, is the same as ; as an immediate consequence, is the same as . The statement follows. □

This natural property, combined with the better behavior of CausDP in scenarios like Example 2, might make CausDP seem like a better alternative to DistDP when one wants to capture an attacker with partial knowledge under the causal interpretation of differential privacy. However, even with this fix, when records are not independent, CausDP is not always safe to use. We present an example from Adam Smith (personal correspondence, 2018-09-28) showing that a slightly modified version of the identity function can still be CausDP if records are strongly correlated.

Example 5. Let output triplicated records: for all , is picked from some probability distribution , and . Let be a mechanism that “corrects” a modified record: if there is a record value appearing only once, and a value appearing only twice, then changes the record to ; then always outputs the entire database. It is easy to check that is -CausDP.
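The “correcting” mechanism of Example 5 can be sketched as follows. The sketch makes two assumptions that are not stated in the example: distinct triples carry distinct values, and the replacement value does not already occur in the database. The name `correcting_mechanism` is hypothetical.

```python
from collections import Counter

def correcting_mechanism(db):
    """Undo a single-record change, then output the entire database.

    If exactly one value appears once and exactly one value appears twice,
    the singleton is overwritten with the value that appears twice.
    """
    counts = Counter(db)
    once = [v for v, c in counts.items() if c == 1]
    twice = [v for v, c in counts.items() if c == 2]
    out = list(db)
    if len(once) == 1 and len(twice) == 1:
        out[out.index(once[0])] = twice[0]
    return out

# Triplicated records, with distinct values per triple (an assumption).
db = [v for v in (4, 7, 9) for _ in range(3)]

# Replace each record in turn by a fresh value (100 is arbitrary) and check
# that the mechanism's output is the same as on the unmodified database.
outputs_match = []
for i in range(len(db)):
    modified = list(db)
    modified[i] = 100
    outputs_match.append(correcting_mechanism(modified) == correcting_mechanism(db))
```

Under these assumptions, the output on the modified database equals the output on the original one, even though the mechanism publishes every record.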

This example is more artificial than Example 2, as the mechanism itself “cheats” to use dependencies in the data. Nonetheless, it shows that some mechanisms that leak the full database can be CausDP. Thus, using CausDP as a privacy measure of a given mechanism is dangerous if no information about the mechanism is known. We do not know whether more natural mechanisms could lead to similar counterexamples, for certain classes of probability distributions; but it is clear that simply applying the same technique as the causal variants of [TSD20] is insufficient to entirely solve the problems with correlations under partial knowledge.

Imposing an additional criterion on the definition

In this section, we propose a criterion that the distribution must satisfy before NPr can be used. We argue that when this criterion is not satisfied, NPr is not a good measure of the privacy of a mechanism. But when it is satisfied, we obtain natural properties that are false in general: NPr and CausDP are equivalent, and attackers with more partial knowledge are stronger.

We mentioned previously that NPr is not monotonic: an attacker with more knowledge can be considered to be less powerful. In Example 1, an attacker who did not know can learn by observing . This increases their knowledge about : they now know that is probably around , a fact previously unknown. However, an attacker , who already knew , does not increase their knowledge as much when observing . Example 2 and Example 5 show that DistDP and CausDP also suffer from this issue. How can we fix this problem? The informal goal is that an attacker with more background knowledge should gain more information. We must make sure that the privacy quantification cannot be artificially inflated by non-sensitive information learned by the attacker.

In all previous examples, the attacker’s partial knowledge is strongly correlated with the sensitive information. Thus, ε does not only measure the privacy loss due to the mechanism, but also takes into account the attacker’s prior knowledge about the sensitive attribute. Modeling the attacker’s uncertainty is necessary to formalize their partial knowledge, but the only thing that should be captured by ε is the mechanism’s privacy leakage. To ensure this is the case, we argue that the partial knowledge must be independent from the sensitive information, and we propose a formalization that enforces this distinction between sensitive information and partial knowledge.

To this end, we propose an alternative way to model the attacker’s uncertainty, and suggest normalizing the distribution to cleanly separate sensitive information and partial knowledge. We show that if such a normalization exists, then an attacker with more partial knowledge is more powerful. Thus, the existence of such a normalization is a desirable property for privacy definitions that model an attacker with partial knowledge, and we argue that it should serve as a criterion that must be satisfied before using such definitions, in order to get meaningful results.

How can we formalize the intuition that the sensitive information should be separated from the partial knowledge? The core idea is to express as the output of a generative function, with independent random parameters. Each possible value of these parameters corresponds to a possible database.

Definition 53 (Normalization of data-generating distributions). A normalization of a probability distribution is a family of mutually independent random variables , and an injective, deterministic function , such that .

A normalization de-correlates the distribution: it splits its randomness into independent parts . The can then play distinct roles: one parameter can capture the sensitive property, while the others can model the attacker’s partial knowledge. We capture this additional requirement in the following definition.

Definition 54 (Acceptable parameters). Given a distribution with values in , an acceptable normalization of at index is a normalization where:

1. entirely determines the sensitive attribute : there exists a function such that for all possible values of , .

2. Some of the entirely determine the partial knowledge: there exists and an injective function such that .

A family of distributions is acceptable if each has an acceptable normalization at all indices .

In practice, we can simply consider a family for some to be the attacker’s partial knowledge, rather than using a bijection. When partial knowledge is defined using a subset of parameters, an attacker has more partial knowledge when they know more parameters.

Informally, must contain enough information to retrieve the sensitive attribute, and the partial knowledge must be independent from it. How can we normalize the in Example 1? We cannot have one parameter for , and parameters for the noise added to each record: the sensitive attribute would require two parameters to express. Rather, could be a pair containing both and the noise added at , . The attacker’s partial knowledge can be , for , without . In other words, itself is also sensitive. What if we do not want to consider as sensitive? Then, can be the value of the noise added to the record , , and is another parameter that can (or not) be part of the attacker’s partial knowledge.

As this example shows, this separation between the partial knowledge and the sensitive value forces us to carefully choose the sensitive value, and it prevents us from comparing scenarios where the sensitive value varies. This formalism can now be used to precisely define the relative strength of two attackers, based on their partial knowledge, and show that an attacker with more knowledge is more powerful.

Definition 55 (Relative strength of partial knowledge). Given probability distributions and with values in , we say that has more background knowledge than if the three following conditions are satisfied.

1. : the only difference between the probability distributions is the partial knowledge.

2. For all , there exists an acceptable normalization given that is common to and .

3. For all , if we denote and the set of parameters that correspond to and respectively in this acceptable normalization, then .

Given two families of probability distributions and , we say that has more background knowledge than if for all , there exists such that has more background knowledge than .

Proposition 23. Let and be two families of distributions such that has more background knowledge than . If a mechanism satisfies -NPr, it also satisfies -NPr.

Proof. Suppose that is -NPr. For a distribution and an index , let be such that is stronger than . By definition, there exists an acceptable normalization common to and . Let be the function extracting the sensitive value from in this normalization. Denoting and the set of parameters corresponding respectively to and , as a simplification, we assume that and ; it is straightforward to adapt the proof to the more generic case. For any output , and all values , we can decompose:

The are independent: is the same as . Since satisfies -NPr, we also have for all :

Thus:

and thus, satisfies -NPr. Adapting the proof to cases where is straightforward. □

This proposition states that, for acceptable distributions, partial background knowledge can be formalized in a reasonable and intuitive way, guaranteeing that attackers with more background knowledge are stronger. Another advantage is that when this criterion holds, NPr and CausDP are equivalent.

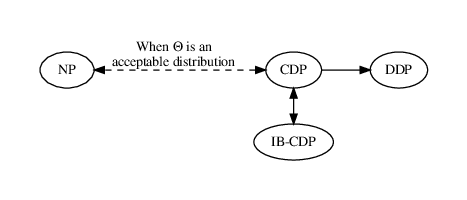

The proof of this equivalence is the same as for Proposition 22. Figure 3.1 summarizes the relations between the definitions introduced in this section.

Acceptable normalizations force practitioners to define which information is considered private. If, like in DP, the sensitive information is the value of a given record, with an acceptable normalization, the attacker’s partial knowledge cannot contain records correlated with the target record. This might seem overly restrictive: what if the attacker does know some information correlated with the target record? In this case, one must change the sensitive information to only consider the decorrelated part as sensitive.

To illustrate this process, consider a medical database where the diagnostic records of different patients might be correlated. To find an acceptable normalization, we must choose between two options. The first is to consider attackers that do not have information correlated with the diagnosis of a given patient: in that case, we must protect the information of multiple patients at once, similarly to DP under correlations (a variant of DP defined in [CFYD14]). Another option is for the sensitive property to be the diagnosis of someone given the diagnosis of those they are correlated with. We formally present a simpler example below, in Example 6.

Example 6. Consider a referendum, where people vote in pairs, with some amount of correlation between pairs. More precisely, is a distribution that generates a database of records according to the following process:

- with probability , and with probability ;

- with probability , and with probability .

Suppose the attacker is interested in . There are two ways of modeling this with an acceptable normalization, depending on whether we want to allow the attacker to know .

- We can pick to determine the value of both and . This way, is sufficient to know the value of the sensitive information , and is independent from all for .

- We can also pick to be the event “”, and to be the value of . In that case, the attacker is allowed to know , but the sensitive value has changed: the sensitive value is whether ; which is independent from the actual value of .